[Paper] Not all domains are equally complex: Adaptive Multi-Domain Learning

We propose an adaptive parameterization approach to deep neural networks for multi-domain learning.

Abstract

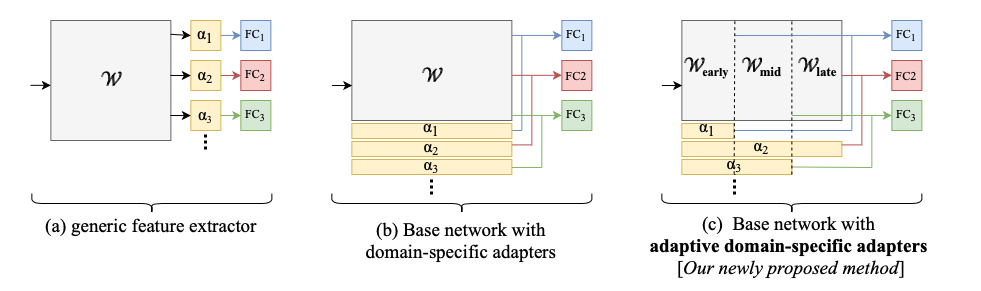

Deep learning approaches are highly specialized and require training separate models for different tasks. Multi-domain learning looks at ways to learn a multitude of different tasks, each coming from a different domain, at once. The most common approach in multi-domain learning is to form a domain-agnostic model, the parameters of which are shared among all domains, and to learn a small number of extra domain-specific parameters for each new domain. However, different domains come with different levels of difficulty; parameterizing the models of all domains using an augmented version of the domain-agnostic model leads to unnecessarily inefficient solutions, especially for easy-to-solve tasks. We propose an adaptive parameterization approach to deep neural networks for multi-domain learning. The proposed approach performs on par with the original approach while substantially reducing the number of parameters, leading to efficient multi-domain learning solutions.
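To make the efficiency argument concrete, here is a minimal sketch of the parameter bookkeeping only. It is not the paper's method: the function name, the domain names, and every number are hypothetical, chosen to show why giving each domain the same number of extra parameters can cost more than sizing them by difficulty.

```python
def total_parameters(shared_params, domain_specific_params):
    """Return the total parameter count of a multi-domain model.

    shared_params: size of the domain-agnostic model, stored once.
    domain_specific_params: mapping from domain name to the number of
        extra parameters learned for that domain.
    """
    return shared_params + sum(domain_specific_params.values())


# Hypothetical sizes, for illustration only (not taken from the paper).
SHARED = 1_000_000

# Uniform budget: every domain gets the same number of extra parameters.
uniform = {"easy_domain": 100_000, "medium_domain": 100_000, "hard_domain": 100_000}

# Adaptive budget: easier domains get fewer extra parameters.
adaptive = {"easy_domain": 10_000, "medium_domain": 50_000, "hard_domain": 100_000}

uniform_total = total_parameters(SHARED, uniform)
adaptive_total = total_parameters(SHARED, adaptive)
saved = uniform_total - adaptive_total

print(f"uniform: {uniform_total}, adaptive: {adaptive_total}, saved: {saved}")
```

The shared model is counted once in both cases, so any saving comes entirely from the domain-specific part. How those per-domain budgets are actually chosen is the subject of the paper and is not modeled here.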

Accepted to the International Conference on Pattern Recognition (ICPR’2020)

Post by: Ali Senhaji

Contact me for comments, thoughts, and feedback.